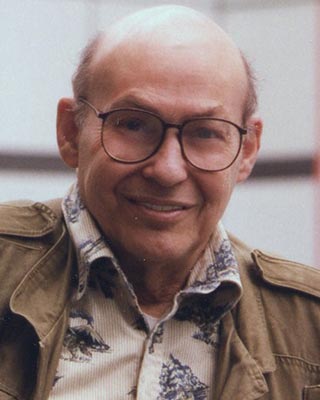

Marvin Minsky, a forefather of artificial intelligence.

(Google images)

My interest in Artificial Intelligence (AI) began in the late 1960s, when I was an undergraduate at MIT. There I had an amazing lecturer on the subject, Marvin Minsky, who is regarded as one of the three great fathers of AI (see photographs).

For 40 years, there was little progress in the field, apart from the concept being exploited by well-known movies (“2001: A Space Odyssey,” “Ex Machina,” “The Matrix,” etc.). About 10 years ago, some of my former fellows, colleagues and I proposed and published a project that used machine learning (a subset of AI) to test my standard teaching paradigm of 10 signs of disc edema.

A total of 122 patients with disc edema were hospitalized for a battery of tests, including MRI and lumbar puncture, leading to a final determination of papilledema vs. pseudopapilledema. Such a project was only possible in a country like Italy, where state-sponsored socialized medicine covered the costs. It turned out that only four of the 10 signs (e.g., swelling of the retinal nerve fiber layer) were critical. I was impressed that AI could objectively evaluate and rank the importance of each sign of disc edema. I was also impressed that off-the-shelf software could now be adapted into an AI algorithm that we could actually use for this study. In short, the revolution had finally come.

What is AI?

Norbert Wiener and John von Neumann. (Amazon images)

The movies get it a little right, but mostly they get AI wrong. They exaggerate the robotics and miss the learning. AI can mean many things; in this essay, I use it in the specific sense of machine learning by neural networks. With this approach, a machine learns not from a logical algorithm, but from its experience.

Such machine learning can use neural networks for “unsupervised training” toward general AI. A neural network can have many hidden layers and thus be remarkably complicated. Its connections can run strictly forward (feedforward), or they can form feedback (recurrent) and other types of loops. In essence, the strength of each connection is adjusted by the training set of experience.

Thus, it continually improves its chances of interpreting inputs usefully. This is akin to positive reinforcement (as when Pavlov’s dog learns to anticipate food at the ringing of a bell) and to negative reinforcement, which conveys an aversive relationship (as when a child touches a hot stove), but at a very deep level that is essentially “a black box.”
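To make “the strength of each connection is adjusted by experience” a little more concrete, here is a minimal Python sketch of a single artificial neuron (logistic regression trained by gradient descent). It is far simpler than the multi-layer networks described above, and the toy two-number “features,” the data and the function names `train_neuron` and `predict` are hypothetical illustrations, not taken from any study in this essay.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range 0..1 (the neuron's 'confidence')."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(examples, epochs=200, lr=0.5, seed=0):
    """Learn connection weights for one artificial neuron from labeled examples.

    examples: list of (features, label) pairs, where label is 0 or 1.
    """
    rng = random.Random(seed)
    n = len(examples[0][0])
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n)]  # start near zero
    bias = 0.0
    for _ in range(epochs):
        for x, y in examples:
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = y - p  # positive: output too low; negative: too high
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the trained neuron's output is at least 0.5, else 0."""
    return 1 if sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5 else 0


# Hypothetical toy data: two made-up features per example, label 1 or 0.
training = [([1.0, 0.8], 1), ([0.9, 1.2], 1), ([1.2, 1.0], 1),
            ([-1.0, -0.7], 0), ([-0.8, -1.1], 0), ([-1.1, -0.9], 0)]
weights, bias = train_neuron(training)
print(predict(weights, bias, [1.1, 0.9]), predict(weights, bias, [-0.9, -1.0]))  # prints: 1 0
```

Nothing in the code states a rule for telling the two groups apart; the weights simply drift toward values that reduce the errors on the examples it has seen.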

Accordingly, machine learning is not that different from the way animals and children use their neural networks (brains) to learn. However, it is fundamentally different from adults sitting in a classroom with pencils and pads, applying logic and formulas. Ironically, it is also the opposite of a human-written computer program.

AI needs input. Lots of input, provided in large training sets. Because AI relies so heavily on huge amounts of data, it works quite well with images, which convey a lot of information. It is the opposite of deductive reasoning and thus must depend on massive amounts of good data to be accurate.

Minsky often lectured that we had to be careful about suitcase words. A suitcase word carries a variety of meanings packed into it. When you use the words “intelligence,” “learning” or “understanding,” you probably mean different things at different times. If we have a discussion using these words, we might assume we understand each other when we really do not. When dealing with AI, it is better to use words like “recognize,” “identify” or “predict.” These are clearer, more invariant terms, and they can be readily quantified.
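One reason “recognize,” “identify” and “predict” are so easy to quantify is that each prediction can be scored against the truth. Here is a minimal Python sketch, assuming a yes/no question (for instance, papilledema vs. pseudopapilledema) and made-up labels and predictions: it tallies right and wrong answers and reports accuracy, sensitivity and specificity, three standard measures. The function names are hypothetical.

```python
def confusion_counts(labels, predictions, positive=1):
    """Count true/false positives and negatives for a yes/no prediction task."""
    tp = fp = tn = fn = 0
    for truth, guess in zip(labels, predictions):
        if guess == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def summarize(labels, predictions, positive=1):
    """Accuracy, sensitivity (true-positive rate) and specificity (true-negative rate)."""
    tp, fp, tn, fn = confusion_counts(labels, predictions, positive)
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# Hypothetical example: 1 = condition present, 0 = absent.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predictions = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
result = summarize(labels, predictions)
print(result)
```

A single “accuracy” number can hide which kind of mistake a system makes, which is why medical studies usually report sensitivity and specificity as well.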

AI can be trained to tell a cat from a dog simply by exposing the neural network to hundreds of thousands of images of cats and dogs. It will then correctly identify new images of cats and dogs, even if parts such as tails and legs are hidden, the animals appear at different sizes or from different perspectives, or the images are just sketches and cartoons. In the end, it is much like my 4-year-old granddaughter, who uses pattern recognition to correctly classify an image of a cat or dog but can’t explain to me how she does it.

What AI is Not

I like to tease my non-ophthalmology physician colleagues at lunch by asking them a version of, “What will you do when AI comes for you?” Their answers are unsettling because they reflect two things: 1) a profound lack of understanding of what AI is, and 2) a prejudice that comes from their recent bad experiences with computers in the form of electronic medical records (EMR).

Most of my physician friends think that AI means powerful computers. Their experience of fast computers usually comes from the recently acquired computer interfaces for EMR. They see that the computer interface prompts them with lots of questions, which they find both intrusive and frustrating, since these questions are often for regulatory and billing purposes. The computer prompts often do not advance the cause of diagnosis or patient management. My physician friends see the computers as more of an obstacle to patient care, though a boon to maximized billing. The “intelligence” they see in the computer is in its comprehensiveness: it does not miss a trick for maximal billing. They do not appreciate how AI differs in kind.

Then I hear this common answer: “Computers won’t replace me,” each says, “because AI can’t capture all the nuances of the diagnostic process.” Alternatively, they say, “Computers can’t administer the kindness and compassion that we, human doctors, provide.” They are thinking of how EMR gets between physicians and their patients. And that’s true, as I have had to remind my residents and fellows to look up from the computer and make eye contact with our patients; but this has nothing to do with the limits of AI. This impediment can easily be bypassed by more sophisticated systems or by non-physician human contact.

So, AI is not a better EMR. It is not a robot. It is not a fast computer programmed by a smart software engineer taking advice from a physician at his side who explains how to make a differential diagnosis. General AI is completely different. It is autonomous. It feeds on data. And it keeps getting better, by itself.

Examples of What AI Can Do

A recent project you may have heard of, as it’s ophthalmological, was done in concert with Google. Ophthalmologists were asked, “Is there a difference in the appearance of a healthy retina between men and women?” We all said, “No.”

Then hundreds of thousands of images of the back of the eye were provided to image-analyzing computers, together with a notation of which were from men and which from women. This was used as a “training set.” Using a neural network system of learning, the AI system was asked to predict whether new images were from men or women. After several iterations, it got really good at that. So now AI could do what humans could never do, and do it with up to 97% accuracy!

How did AI do this? We don’t know. The problem is that we can’t just ask the computer what it was thinking. We can’t dissect and examine the AI process, because there is no explicit underlying logic. But there are ways to “interrogate” the “black box.” One is to mask portions of the fundus image. The AI still did well when the mask blocked out various parts of the retina, but not when it covered the fovea. From this, we know that something about the appearance of the fovea was critical and necessary.
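Masking parts of an image and watching the prediction change is often called occlusion sensitivity analysis. Below is a minimal pure-Python sketch on a toy 6-by-6 “image.” The `toy_model` is a hypothetical stand-in for a trained network that, by construction, looks only at the central 2-by-2 pixels (playing the role of the fovea); a real study would run the actual network on real fundus photographs.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(model: Callable[[Image], float], image: Image,
                  patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Slide a square mask over the image and record how much the model's score drops.

    Large drops mark regions the model relies on; near-zero drops mark regions it ignores.
    """
    h, w = len(image), len(image[0])
    baseline = model(image)
    heat = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            masked = [r[:] for r in image]  # copy so the original image stays intact
            for i in range(top, min(top + patch, h)):
                for j in range(left, min(left + patch, w)):
                    masked[i][j] = fill
            row.append(baseline - model(masked))
        heat.append(row)
    return heat


def toy_model(img: Image) -> float:
    """Hypothetical black box: its score depends only on the central 2x2 pixels."""
    return sum(img[i][j] for i in (2, 3) for j in (2, 3)) / 4


image = [[1.0] * 6 for _ in range(6)]
heat = occlusion_map(toy_model, image, patch=2)
for row in heat:
    print(row)
```

Only the center cell of the resulting map shows a drop, which points to the central region without ever explaining what the model “sees” there, much as in the fovea result above.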

Remarkably, ophthalmologists, even knowing that the gender difference lies in the fovea, still do not see any differences between the retinas of men and women. This is both frustrating and amazing. It’s frustrating, since this AI research has not really revealed new scientific truths about gender and foveas. It’s amazing, since for the first time, computers can do what no ophthalmologist on earth can do by accurately identifying gender from retinal images alone. Imagine what else is hidden from our view, but available for AI to recognize.

Why This Is Fundamentally Different, in Kind as Well as Speed

Sam Harris has a wonderful TED talk titled, “Can we build AI without losing control over it?” Of his many good points, the core one is this: the speed of AI is a game changer by itself. This is partly because AI builds itself. A system that is a little faster than the other AI systems will soon be much faster than its competitors. This concept has not been lost on industry or nations. It has been estimated that whoever takes the lead will leapfrog beyond the others, and not by a little.

Electronic circuits work about a million times faster than the biochemical circuits of the brain. So an AI system no smarter than a team of very good scientists might, in about a week, make the same progress as the best scientists at Stanford and MIT could make in 20,000 years. AI will soon be changing the game in extremely short periods, with no time to make human-friendly adjustments, unless these too were built into the AI.
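A back-of-the-envelope check of that figure, assuming a straight million-fold speedup applied to the same amount of intellectual work and a 365-day year:

```latex
\frac{2 \times 10^{4}\ \text{years}}{10^{6}} = 0.02\ \text{years} = 0.02 \times 365\ \text{days} = 7.3\ \text{days}
```

That is roughly a week of machine time for 20,000 years of human-level work.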

However, there is another fundamental difference between AI and the way humans have made progress over the last 10,000 years. AI is unconstrained by logical paradigms. It’s not even fuzzy logic; it’s completely results-driven. This is faster, more powerful, and less predictable than logic. And less accountable as well.

What This Means to Ophthalmology

We will be at the forefront. Deep learning techniques have already demonstrated that fundus photographs can be used to estimate HbA1c values (±0.35%). We do not know how, but it’s not from the blood vessels. AI can also be used on fundus photographs to estimate a patient’s age (more likely their biological age).

In Singapore (see the work of Ting, Wong and Milea), where the state owns the fundus photographs of most patients, these images can be used to predict, with remarkable accuracy, who will have strokes and heart attacks in the subsequent year. Likely, the eye is just a convenient access point for visualizing the human circulation in vivo. I think we are only a couple of years away from anticipating stroke and neurodegenerative diseases through fundus photographs or OCT.

Of course, we are now capable of using such AI technology to follow age-related macular degeneration, glaucoma and other optic neuropathies, both for grading disease and for screening. Moreover, such grading will become the basis for new Food and Drug Administration-approved treatments and for our acceptance, as ophthalmologists, of new treatment protocols. Because we ophthalmologists acquire so many high-resolution, data-rich images, we may lead the way with AI in medicine. As it is, most of our great imaging data now just goes to waste.

What This Means to Medicine

This major revolution is coming to medicine and healthcare. In addition to image analysis, medicine will use the rich data sets available through EMR and consortiums such as the Academy’s IRIS® Registry and medical records shared by large universities and integrated health care systems such as Kaiser Permanente.

AI will be the standard for screening, diagnosis, prediction and monitoring of disease progress against management strategies (including a comparison of providers). With large data sets, AI will correctly sort people into bins based not on clinical diagnosis, but on unexpected features that identify those most likely to benefit from specific therapies. This is entirely different from what doctors presently do. There will be less understanding, less insight, less logic, less teaching, and less elegance.

Nevertheless, AI is likely to be more effective, more encompassing, and certainly faster and more efficient at managing most patients. The first medical fields to go will probably be those that rely most on data-rich images (likely in this order: radiology, dermatology, pathology and ophthalmology). My personal teaching style, a cornerstone of neuro-ophthalmology, has always emphasized the careful observation of signs and symptoms to set up a hypothesis that can be tested with clever questions and physical testing. That approach will soon become obsolete and will lose out to AI pattern recognition, at least for common disorders.

What AI Means to Society

Science fiction is about to become reality. “Computers are like humans; they do everything except think” (John von Neumann, 1956). Intelligence has always driven change. Artificial intelligence will drive change faster than we can imagine. It is silly to say that the machines will be “smarter than we are.”

“Smart” is a suitcase word. But for sure, they will be much, much faster, more competent and broader in their horizons. It is humanly impossible to anticipate where this is going. The industrial revolution changed our society, our world and our lives in profound ways. The economic and political order changed dramatically in about two hundred years. AI will do the same in much less time. Buckle your seat belts.

On the other hand, AI can solve problems that humans cannot and, in many cases, problems that we do not even think are solvable. Creativity is intelligence applied in unexpected directions, and we should not be surprised when AI identifies as well as solves crises. Our planet is facing some serious challenges, and AI may be the thing that can save us. We might as well try to be part of this solution.